Alongside has big plans to break negative cycles before they turn clinical, said Dr. Elsa Friis, a licensed psychologist for the company, whose background includes identifying autism, ADHD and suicide risk using large language models (LLMs).

The Alongside app currently partners with more than 200 schools across 19 states and collects student chat data for its annual youth mental health report, which is not a peer-reviewed publication. This year’s findings, said Friis, were surprising. With almost no mention of social media or cyberbullying, the student users reported that their most pressing issues had to do with feeling overwhelmed, poor sleep habits and relationship problems.

Alongside touts positive and insightful data points in its report and in a pilot study conducted earlier in 2025, but experts like Ryan McBain, a health researcher at the RAND Corporation, said that the data isn’t robust enough to understand the real implications of these types of AI mental health tools.

“If you’re going to market a product to millions of children in adolescence throughout the United States through school systems, they need to meet some minimum standard in the context of actual rigorous trials,” said McBain.

But underneath all of the report’s data, what does it really mean for students to have 24/7 access to a chatbot that is designed to address their mental health, social, and behavioral concerns?

What’s the difference between AI chatbots and AI companions?

AI companions fall under the larger umbrella of AI chatbots. And while chatbots are becoming more and more sophisticated, AI companions are distinct in the ways that they interact with users. AI companions tend to have fewer built-in guardrails, meaning they are coded to endlessly adapt to user input; AI chatbots, on the other hand, might have more guardrails in place to keep a conversation on track or on topic. For example, a troubleshooting chatbot for a food delivery company has specific instructions to carry on conversations that pertain only to food delivery and app issues. It isn’t designed to stray from the topic because it doesn’t know how to.

But the line between AI chatbot and AI companion blurs as more and more people use chatbots like ChatGPT as an emotional or therapeutic sounding board. The people-pleasing features of AI companions have become a growing concern, especially when it comes to teens and other vulnerable people who use these companions to, at times, validate their suicidality, delusions and unhealthy dependency on the companions themselves.

A recent report from Common Sense Media expanded on the harmful effects that AI companion use has on adolescents and teens. According to the report, AI platforms like Character.AI are “designed to simulate humanlike interaction” in the form of “virtual friends, confidants, and even therapists.”

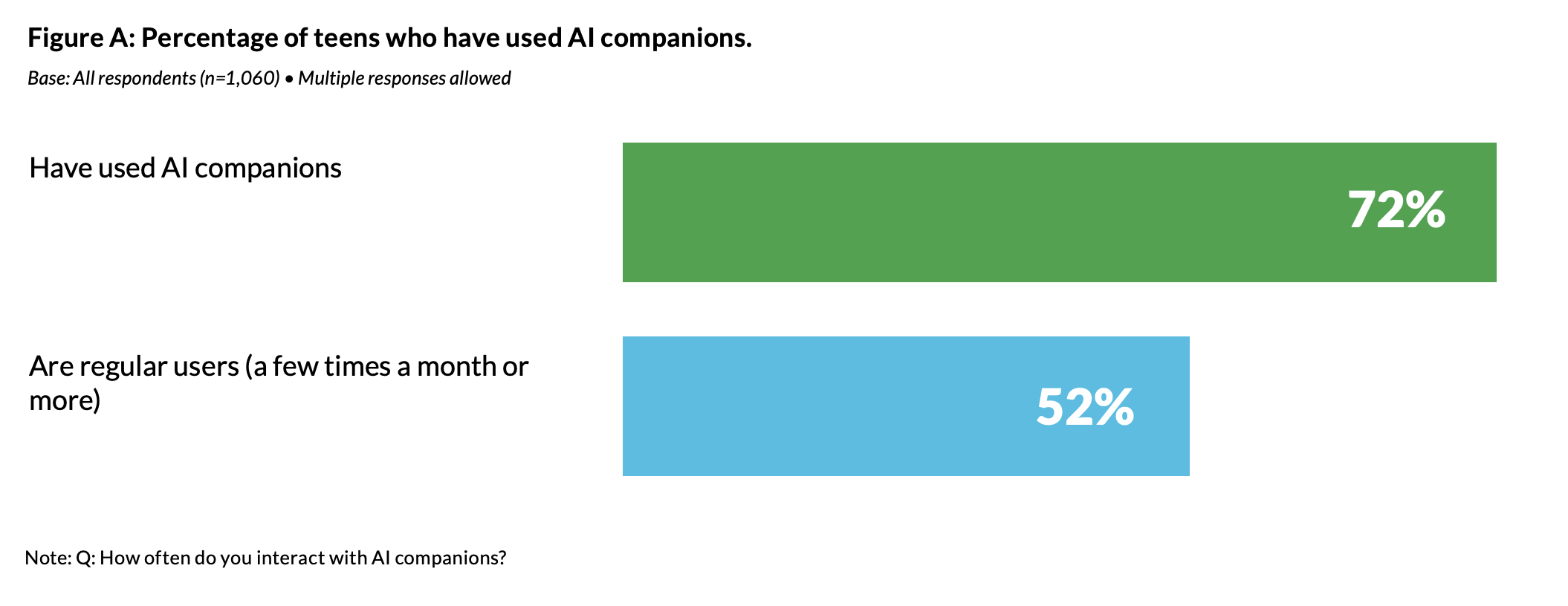

Although Common Sense Media found that AI companions “pose ‘unacceptable risks’ for users under 18,” young people are still using these platforms at high rates.

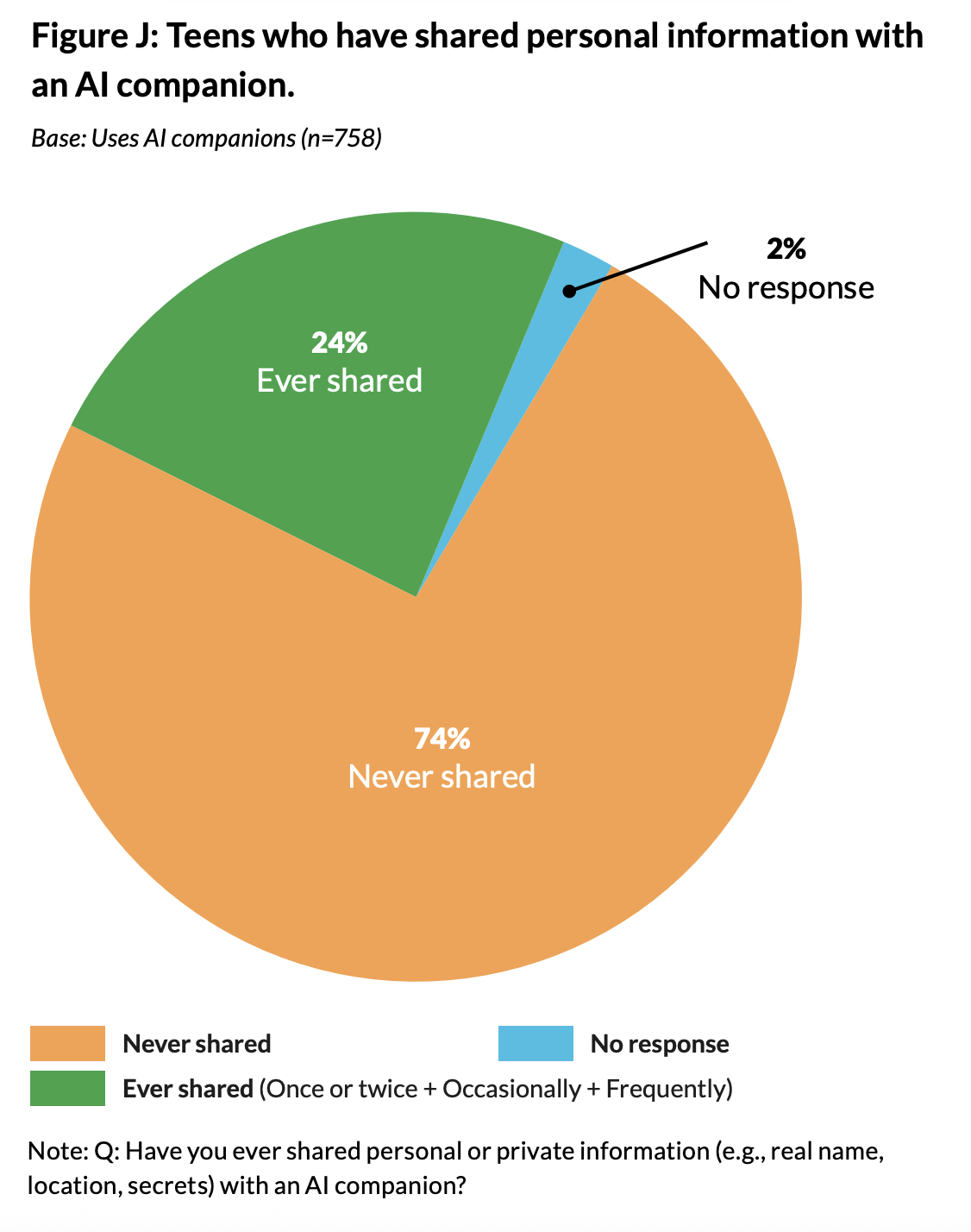

Seventy-two percent of the 1,060 teens surveyed by Common Sense said that they had used an AI companion before, and 52% of teens surveyed are “regular users” of AI companions. For the most part, however, the report found that teens value human friendships more than AI companions, don’t share personal information with AI companions and hold some level of skepticism toward them. Thirty-nine percent of teens surveyed also said that they apply skills they practiced with AI companions, like expressing emotions, apologizing and standing up for themselves, in real life.

Alongside’s chatbot features meet some of Common Sense Media’s recommendations for safer AI use, such as crisis intervention, usage limits and skill-building elements. According to Mehta, there is a big difference between an AI companion and Alongside’s chatbot. Alongside’s chatbot has built-in safety features that require a human to review certain conversations based on trigger words or concerning phrases. And unlike AI companions, Mehta continued, Alongside discourages student users from chatting too much.

One of the biggest challenges that chatbot developers like Alongside face is mitigating people-pleasing tendencies, a defining characteristic of AI companions, said Friis. Alongside’s team has put guardrails in place to avoid people-pleasing, which can turn sinister. “We aren’t going to adapt to foul language, we aren’t going to adapt to bad habits,” said Friis. But it’s up to Alongside’s team to anticipate and determine which language falls into harmful categories, including when students try to use the chatbot for cheating.

According to Friis, Alongside errs on the side of caution when it comes to determining what kind of language constitutes a concerning statement. If a chat is flagged, teachers at the partner school are pinged on their phones. In the meantime, Kiwi prompts the student to complete a crisis assessment and directs them to emergency service numbers if needed.

Addressing staffing shortages and resource gaps

In school settings where the ratio of students to school counselors is often impossibly high, Alongside acts as a triaging tool or liaison between students and their trusted adults, said Friis. For example, a conversation between Kiwi and a student might consist of back-and-forth troubleshooting about creating healthier sleeping habits. The student might be prompted to talk to their parents about making their room darker or adding in a nightlight for a better sleep environment. The student might then come back to their chat after a conversation with their parents and tell Kiwi whether or not that solution worked. If it did, then the conversation concludes, but if it didn’t then Kiwi can suggest other potential solutions.

According to Friis, a couple of five-minute back-and-forth conversations with Kiwi would translate to days if not weeks of conversations with a school counselor, who has to prioritize students with the most severe issues and needs, like repeated suspensions, suicidality and dropping out.

Using digital technologies to triage health issues is not a new idea, said RAND researcher McBain, who pointed to doctors’ waiting rooms that greet patients with a health screener on an iPad.

“If a chatbot is a slightly more dynamic user interface for gathering that sort of information, then I think, in theory, that is not an issue,” McBain continued. The unanswered question is whether chatbots like Kiwi perform better than, as well as, or worse than a human would, but the only way to compare the human to the chatbot would be through randomized controlled trials, said McBain.

“One of my biggest fears is that companies are rushing in to try to be the first of their kind,” said McBain. In the process, he continued, they are lowering the safety and quality standards under which these companies and their academic partners circulate optimistic and eye-catching results about their products.

But there’s mounting pressure on school counselors to meet student needs with limited resources. “It’s really hard to create the space that [school counselors] want to create. Counselors want to have those interactions. It’s the system that’s making it really hard to have them,” said Friis.

Alongside offers their school partners professional development and consultation services, as well as quarterly summary reports. A lot of the time these services revolve around packaging data for grant proposals or for presenting compelling information to superintendents, said Friis.

A research-backed approach

On its website, Alongside touts the research-backed methods used to develop its chatbot, and the company has partnered with Dr. Jessica Schleider at Northwestern University, who studies and develops single-session mental health interventions (SSIs), which are designed to address and resolve mental health concerns without the expectation of any follow-up sessions. A typical counseling intervention is, at minimum, 12 weeks long, so single-session interventions were appealing to the Alongside team, but “what we know is that no product has ever been able to really effectively do that,” said Friis.

However, Schleider’s Lab for Scalable Mental Health has published multiple peer-reviewed trials and clinical research demonstrating positive results for implementation of SSIs. The Lab for Scalable Mental Health also offers open source materials for parents and professionals interested in implementing SSIs for teens and young people, and their initiative Project YES offers free and anonymous online SSIs for youth experiencing mental health concerns.

What happens to a kid’s data when using AI for mental health interventions?

Alongside gathers data from students’ conversations with the chatbot, including mood, hours of sleep, exercise habits, social habits and online interactions. While this data can offer schools insight into their students’ lives, it does bring up questions about student surveillance and data privacy.

Alongside, like many other generative AI tools, uses other companies’ LLMs through APIs (application programming interfaces), meaning its chatbot programming calls on another company’s LLM, like those behind OpenAI’s ChatGPT, to process chat input and produce chat output. The company also has its own in-house LLMs, which Alongside’s AI team has developed over a couple of years.

Growing concerns about how user data and personal information are stored are especially pertinent when it comes to sensitive student data. The Alongside team has opted in to OpenAI’s zero data retention policy, which means that none of the student data is stored by OpenAI or the other LLM providers that Alongside uses, and none of the data from chats is used for training purposes.

Because Alongside operates in schools across the U.S., it is FERPA and COPPA compliant, but the data has to be stored somewhere. So students’ personally identifiable information (PII) is uncoupled from their chat data when that information is stored on Amazon Web Services (AWS), a cloud platform widely used by tech companies around the world for private data storage.

Alongside uses an encryption process that disaggregates student PII from the chats. Only when a conversation gets flagged and needs to be seen by humans for safety reasons is the student’s PII connected back to the chat in question. In addition, Alongside is required by law to store student chats and information when it has issued a crisis alert, and parents and guardians are free to request that information, said Friis.

Typically, parental consent and student data policies are handled through the school partners, and as with other school services such as counseling, there is a parental opt-out option that must adhere to state and district guidelines on parental consent, said Friis.
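
As a rough illustration of the separation described above, the Python sketch below keeps identity and chat text in separate stores and joins them only for flagged conversations. It is not Alongside’s implementation: the class name, the method names and the use of random tokens (in place of the encryption process Friis described) are all assumptions made for illustration.

```python
import secrets


class PseudonymizedChatStore:
    """Hypothetical sketch: chat records never carry student identity directly."""

    def __init__(self):
        # token -> identity; in practice this would sit in a separate,
        # access-controlled store (an assumption, not a documented design).
        self._identity_by_token = {}
        self._token_by_student = {}
        # Chat records hold only a random token, the text and a flag.
        self._chats = []

    def _token_for(self, student_id, name):
        # Issue one stable random token per student.
        if student_id not in self._token_by_student:
            token = secrets.token_hex(16)
            self._token_by_student[student_id] = token
            self._identity_by_token[token] = {"student_id": student_id, "name": name}
        return self._token_by_student[student_id]

    def save_chat(self, student_id, name, text, flagged=False):
        # Store the chat without any identifying fields.
        record = {"token": self._token_for(student_id, name), "text": text, "flagged": flagged}
        self._chats.append(record)
        return record

    def identity_for_review(self, record):
        # Re-link identity only when a human needs to review a flagged chat.
        if not record["flagged"]:
            raise PermissionError("identity is only re-linked for flagged chats")
        return self._identity_by_token[record["token"]]
```

In this sketch, an unflagged record can be analyzed for trends such as sleep or mood without revealing who wrote it, while a flagged record can be traced back to a student for a safety review.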

Alongside and their school partners put guardrails in place to make sure that student data is kept safe and anonymous. However, data breaches can still happen.

How the Alongside LLMs are trained

One of Alongside’s in-house LLMs is used to identify potential crises in student chats and alert the necessary adults to that crisis, said Mehta. This LLM is trained on student and synthetic outputs and on keywords that the Alongside team enters manually. And because language changes often and isn’t always straightforward or easily recognizable, the team keeps an ongoing log of different words and phrases, like the popular abbreviation “KMS” (shorthand for “kill myself”), that they retrain this particular LLM to understand as crisis-driven.

According to Mehta, manually inputting data to train the crisis-assessing LLM is one of the biggest efforts that he and his team have to tackle, but he doesn’t see a future in which this process could be automated by another AI tool. “I wouldn’t be comfortable automating something that could trigger a crisis [response],” he said. His preference is for the clinical team led by Friis to contribute to this process through a clinical lens.
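
To make the idea of a manually maintained phrase log concrete, here is a minimal Python sketch. It is a plain phrase matcher, not an LLM, and it does not reflect how Alongside’s model is trained; the class name `CrisisPhraseLog` and its methods are hypothetical. It shows only why new slang has to be added by hand before a system can recognize it.

```python
import re


class CrisisPhraseLog:
    """Hypothetical, manually curated list of phrases treated as crisis signals."""

    def __init__(self, phrases=()):
        self._phrases = set()
        for phrase in phrases:
            self.add(phrase)

    def add(self, phrase):
        # Store phrases lowercased so matching is case-insensitive.
        self._phrases.add(phrase.lower().strip())

    def matches(self, message):
        # Return every logged phrase that appears as whole words in the message.
        text = message.lower()
        found = []
        for phrase in sorted(self._phrases):
            if re.search(r"\b" + re.escape(phrase) + r"\b", text):
                found.append(phrase)
        return found


log = CrisisPhraseLog(["kill myself"])
before = log.matches("honestly might KMS")  # abbreviation not logged yet
log.add("KMS")                              # a reviewer adds the new shorthand
after = log.matches("honestly might KMS")
```

Until a reviewer logs the abbreviation, the same message goes unrecognized, which is the maintenance burden Mehta describes.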

But with the potential for rapid growth in Alongside’s number of school partners, these processes will be very difficult to keep up with manually, said Robbie Torney, senior director of AI programs at Common Sense Media. Although Alongside emphasized their process of including human input in both their crisis response and LLM development, “you can’t necessarily scale a system like [this] easily because you’re going to run into the need for more and more human review,” continued Torney.

Alongside’s 2024-25 report tracks conflicts in students’ lives but doesn’t distinguish whether those conflicts are happening online or in person. According to Friis, it doesn’t really matter where peer-to-peer conflict takes place. Ultimately, it’s most important to be person-centered, she said, and to remain focused on what really matters to each individual student. Alongside does offer proactive skill-building lessons on social media safety and digital stewardship.

When it comes to sleep, Kiwi is programmed to ask students about their phone habits “because we know that having your phone at night is one of the main things that’s gonna keep you up,” said Dr. Friis.

Universal mental health screeners available

Alongside also offers an in-app universal mental health screener to school partners. One district in Corsicana, Texas — an old oil town situated outside of Dallas — found the data from the universal mental health screener invaluable. According to Margie Boulware, executive director of special programs for Corsicana Independent School District, the community has had issues with gun violence, but the district didn’t have a way of surveying their 6,000 students on the mental health effects of traumatic events like these until Alongside was introduced.

According to Boulware, 24% of students surveyed in Corsicana had a trusted adult in their life, six percentage points lower than the average in Alongside’s 2024-25 report. “It’s a little shocking how few kids are saying ‘we actually feel connected to an adult,’” said Friis. According to research, having a trusted adult helps with young people’s social and emotional health and wellbeing, and can also counter the effects of adverse childhood experiences.

In a county where the school district is the biggest employer and where 80% of students are economically disadvantaged, mental health resources are sparse. Boulware drew a connection between the uptick in gun violence and the high percentage of students who said that they did not have a trusted adult in their home. And although the data given to the district from Alongside did not directly correlate with the violence that the community had been experiencing, it was the first time that the district was able to take a more comprehensive look at student mental health.

So the district formed a task force to tackle these issues of increased gun violence and decreased mental health and belonging. And for the first time, rather than having to guess how many students were struggling with behavioral issues, Boulware and the task force had representative data to build on. Without the universal screening survey that Alongside delivered, the district would have stuck to its end-of-year feedback survey, which asked questions like “How was your year?” and “Did you like your teacher?”

Boulware believed that the universal screening survey encouraged students to self-reflect and answer questions more truthfully when compared with previous feedback surveys the district had conducted.

According to Boulware, student resources and mental health resources in particular are scarce in Corsicana. But the district does have a team of counselors including 16 academic counselors and six social emotional counselors.

With not enough social emotional counselors to go around, Boulware said that a lot of tier one students, or students who don’t require regular one-on-one or group academic or behavioral interventions, fly under the radar. She saw Alongside as an easily accessible tool for students that offers discreet coaching on mental health, social and behavioral issues. It also offers educators and administrators like herself a glimpse behind the curtain into student mental health.

Boulware praised Alongside’s proactive features, like gamified skill building. Students who struggle with time management or task organization can earn points and badges for completing certain skills lessons.

And Alongside fills an important gap for staff in Corsicana ISD. “The amount of hours that our kiddos are on Alongside…are hours that they’re not waiting outside of a student support counselor office,” which, because of the low ratio of counselors to students, allows for the social emotional counselors to focus on students experiencing a crisis, said Boulware. There is “no way I could have allotted the resources,” that Alongside brings to Corsicana, Boulware added.

The Alongside app requires 24/7 human monitoring by its school partners. This means that designated educators and administrators in each district and school are assigned to receive alerts at all hours of the day, any day of the week, including during holidays. This feature was a concern for Boulware at first. “If a kiddo’s struggling at three o’clock in the morning and I’m asleep, what does that look like?” she said. Boulware and her team had to hope that an adult would see a crisis alert very quickly, she continued.

This 24/7 human monitoring system was tested in Corsicana last Christmas break. An alert came in, and it took Boulware ten minutes to see it on her phone. By that time, the student had already begun working on an assessment survey prompted by Alongside, the principal, who had seen the alert before Boulware, had called her, and she had received a text message from the student support council. Boulware was able to contact the local chief of police and address the unfolding crisis. The student was able to connect with a counselor that same afternoon.
