Amid ongoing concerns around the harms caused by social media, especially to young children, various U.S. states are now implementing their own laws and regulations designed to curb such harms wherever they can.

But the various approaches underline the broader challenge in policing social media misuse, and protecting kids online.

New York is the latest state to implement child protection laws, with New York Governor Kathy Hochul today signing both the “Stop Addictive Feeds Exploitation (SAFE) for Kids” act and a Child Data Protection Act.

The Stop Addictive Feeds act is the more controversial of the two, with the bill intended to “prohibit social media platforms from providing an addictive feed to children younger than 18 without parental consent.”

By “addictive feed”, the bill is seemingly referring to all algorithmically-defined news feeds within social apps.

From the bill:

“Addictive feeds are a relatively new technology used principally by social media companies. Addictive feeds show users personalized feeds of media that keep them engaged and viewing longer. They started being used on social media platforms in 2011, and have become the primary way that people experience social media. As addictive feeds have proliferated, companies have developed sophisticated machine learning algorithms that automatically process data about the behavior of users, including not just what they formally “like” but tens or hundreds of thousands of data points such as how long a user spent looking at a particular post. The machine learning algorithms then make predictions about mood and what is most likely to keep each of us engaged for as long as possible, creating a feed tailor-made to keep each of us on the platform at the cost of everything else.”

If these new regulations take effect, social media platforms operating within New York would no longer be able to offer algorithmic news feeds to users under 18 without parental consent, and would instead have to provide alternative, algorithm-free versions of their apps.

In addition, social platforms would be prohibited from sending notifications to minors between the hours of 12:00am and 6:00am.

To be clear, the bill hasn’t been implemented as yet, and is likely to face challenges before it takes full effect. But the proposal’s intended to offer more protection for teens, and ensure that they’re not getting hooked on social apps and exposed to their harmful impacts.

Various reports have shown that social media usage can be particularly harmful for younger users, with Meta’s own research indicating that Instagram can have negative effects on the mental health of teens.

Meta has since disputed those findings (its own), by noting that “body image was the only area where teen girls who reported struggling with the issue said Instagram made it worse.” But even so, many other reports have also pointed to social media as a cause of mental health impacts among teens, with negative comparison and bullying among the chief concerns.

As such, it makes sense for regulators to take action, but the concern here is that without overarching federal regulations, individual state-based action could create an increasingly complex situation for social platforms to operate in.

Indeed, we’ve already seen Florida implement laws that require parental consent for 14- and 15-year-olds to create or maintain social media accounts, while Maryland has also proposed new regulations that would restrict what data can be collected from young people online, and implement more protections.

On a related regulatory note, the state of Montana also sought to ban TikTok last year, based on national security concerns, though that was blocked in court before it could take effect.

But again, it’s an example of state legislators looking to step in to protect their constituents, on elements where they feel that federal policy makers are falling short.

That’s unlike Europe, where EU policy groups have formed wide-reaching regulations on data usage and child protection, with every EU member state covered under their remit.

That’s also caused headaches for the social media giants operating in the region, but they have been able to align with all of these requests, which have included things like an algorithm-free user experience, and even no ads.

That’s why U.S. regulators know that these requests are possible, and it does seem like, eventually, pressure from the states will force the implementation of similar restrictions and alternatives in the U.S.

But really, this needs to be a national approach.

There need to be national regulations, for example, on accepted age verification processes, national agreement on the impacts of algorithmic amplification on teens and whether it should be allowed, and possible restrictions on notifications and usage.

Restricting overnight push notifications does seem like a good step in this regard, but it should be the White House establishing acceptable rules around them, and that should not be left to the states.

But in the absence of federal action, the states are trying to implement their own measures, most of which will be challenged and defeated. And while the Senate is debating more universal measures, it seems like a lot of responsibility is falling to lower levels of government, which are spending time and resources on problems that they shouldn’t be responsible for fixing.

Essentially, these announcements are more a reflection of frustration, and the Senate should be taking note.

You Might Also Like

How the TikTok Algorithm Works [Infographic]

Is TikTok part of your holiday marketing plan? While the app remains a focus of regulatory concerns, due to its...

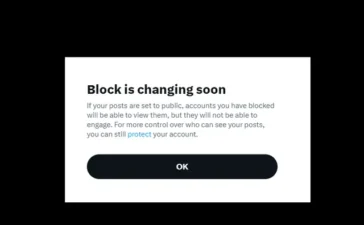

X Moves to the Next Stage of Diluting the Power of Blocking

X is moving closer to removing the block functionality, which it’s been exploring for over a year, ever since platform...

Google Highlights Top Halloween Search Trends

Seeking some extra inspiration for your Halloween outfit or promotion? This will help. Google has launched the latest version of...

X Avoids EU ‘Gatekeeper’ Designation and Requirements

Yeah, I’m not sure that this is the win that Elon Musk and Co. seem to think that it is....